Prediction Markets Win Again

To me, it's clear that prediction/betting markets won this election cycle once again. (I'll use "prediction markets" to refer to both in this post.)

Now, I'm a little biased: I love markets, and I especially love prediction markets. I, like many, view them as the best way to predict political events and a useful counter to the "politicos" on CNN who have no skin in the game.

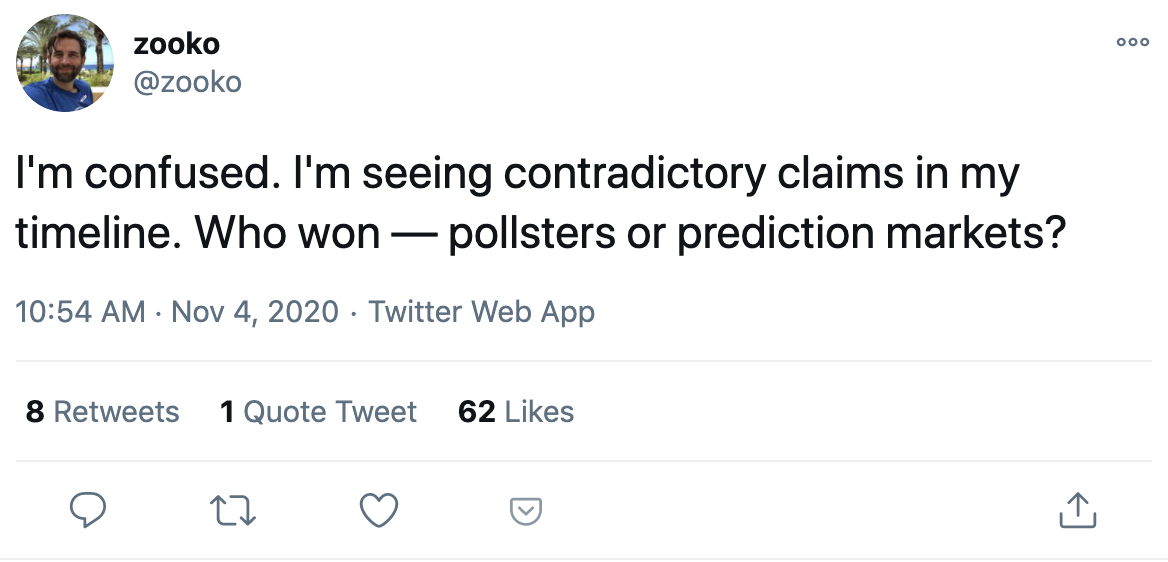

But many people seem to be confused as to whether pollsters or prediction markets were more accurate this time around.

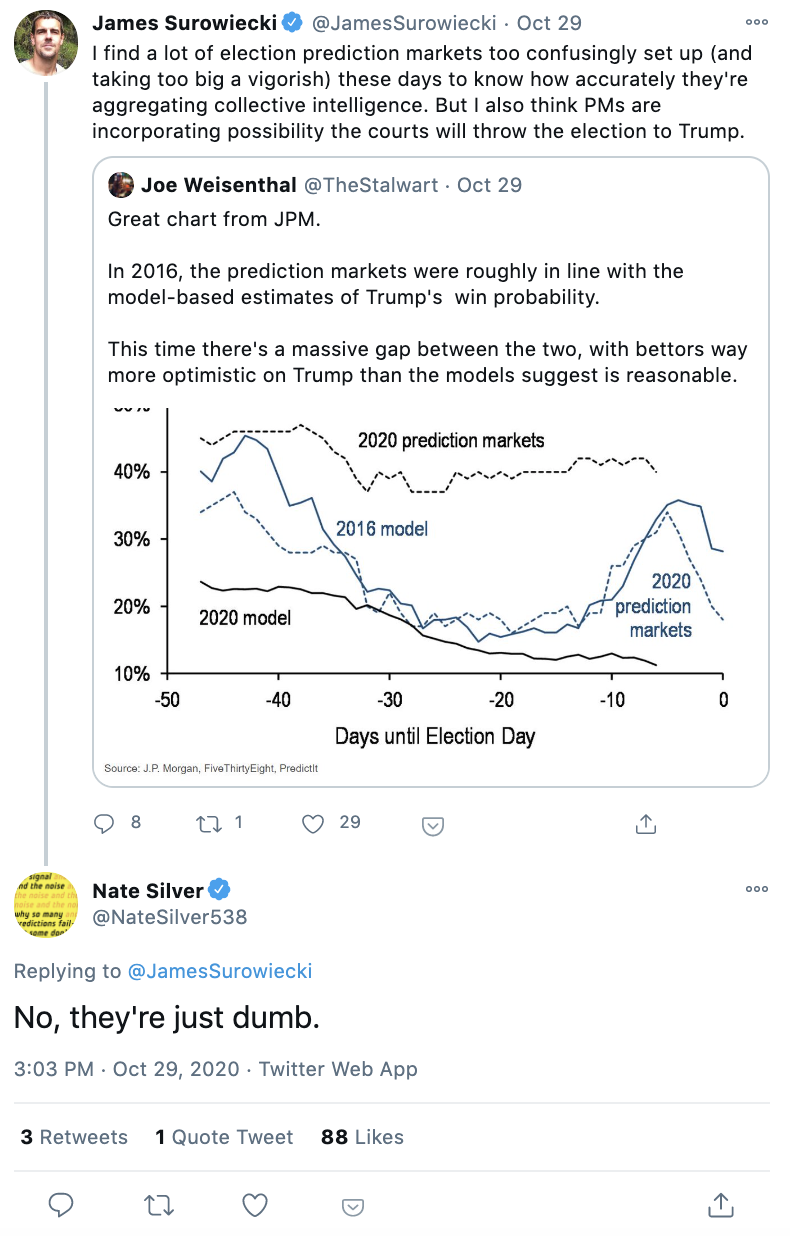

This is in no small part due to commentary by Nate Silver of 538, who has been out front defending his prediction. Now, I'm not here to pick on Nate Silver, but he really hates prediction markets. Almost as much as I love them. He's called them "competitive mansplaining," said they are populated by people with a "sophomoric knowledge of politics," and described them as "a competition over what types of people suffer more from the Dunning Kruger effect" (which is a pretty harsh diss for a self-proclaimed nerd). Nate believes building political forecasting models requires relevant expertise which "almost no people" have, and that's why prediction markets have a "poor track record" (see here for a compilation of comments).

When a writer wondered why pre-election prediction markets gave Trump a better chance of winning than the polls, Nate's reply was simple: they're just dumb!

I may sound like I'm picking on Nate for no reason. But he is the country's foremost election forecasting expert, so his derision of prediction markets has a big impact on the debate. And I am actually a huge 538 and Nate Silver fan, so writing this post was not easy.

Below, I'll explain why I think prediction markets beat the polls this election and how I hope we can expand the use of prediction markets in the future.

Overview of prediction markets

First, some background: Prediction markets are really just an offshoot of betting markets. You can bet on the winner of the Pats-Jets game this weekend, so why can't you bet on the winner of the Presidential election?

But while sports betting is increasingly legalized (and booming) across the country, political prediction markets remain heavily restricted by regulation. The CFTC has jurisdiction and has all but banned most types of prediction markets in the US (including taking down the once-popular Intrade).

The main exception to this is Predictit, which is the largest political prediction market today. Predictit avoids stricter scrutiny by technically being a non-profit educational project of a New Zealand university and by imposing harsh limits on trading, like a cap of $850 per contract (see more here).

Predictit is the only significant player in the US, but only because US residents lack access to the larger international options like the UK-based Betfair (the world's largest online betting market) or cryptocurrency exchanges like FTX. Betfair alone had more than $300m bet on the 2020 election.

Thankfully, there is a fantastic site by John Stossel that aggregates the betting markets' odds across these many options, and I will be using it here. The only exception is for state races, where I will only use Predictit (the only data source I can find).

Evaluating Success

I'm going to compare prediction markets to 538 for a few reasons: 538 gave Trump one of the highest probabilities of winning (more than the embarrassingly low 3% the Economist gave), it is the most prominent polling aggregator, and Nate Silver has been especially critical of prediction markets. For both, I use the final forecast before the election (Nov 2nd).

Evaluating success is not as easy as you might think. We don't have the luxury of running the same election 1000 times and seeing what percent of the time each candidate wins, and we only have limited data for prediction markets compared to 538. There is also a larger debate on this topic (e.g., see Taleb vs. Silver) that I won't address.

Instead, I'll keep things simple. We'll first evaluate who was best at predicting the binary outcomes of the President (states included), Senate, and House. Then, we'll move beyond binary outcomes and look at a type of "calibration" for each model (not quite a traditional calibration as we only have limited data). With these two methods, we can come to an answer.

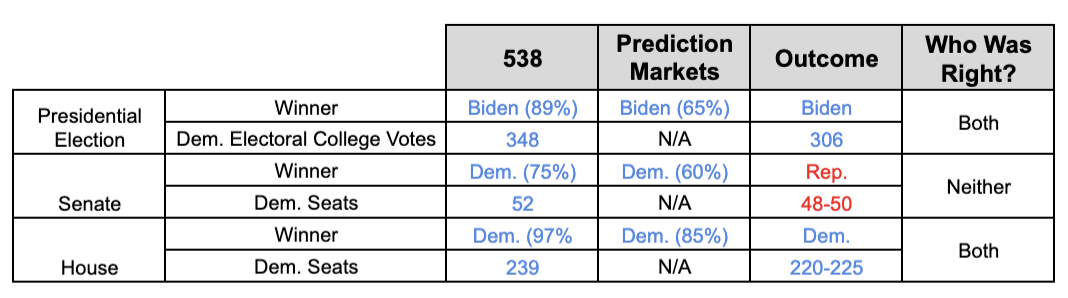

Prediction markets were closer to the mark

First, the binary win/loss outcomes: 538 and prediction markets both expected Biden to win (correct), Dems to take the Senate (probably wrong), and Dems to keep the House (correct). But for each, prediction markets assigned a higher probability of a Republican victory, which will most likely happen in the Senate after Georgia's runoff elections. And, though we don't have great prediction markets for electoral college votes, Senate seats, or House seats, it seems highly likely that they would have been more accurate than 538 given the prediction markets' disposition towards Trump.

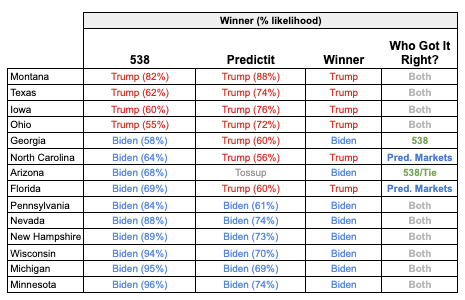

What about correctly predicting which candidate would take each state? I'll focus on the battleground states, as both 538 and prediction markets easily predicted the rest. Here, it gets a little tricky: Both correctly guessed the winner in most states. But prediction markets correctly picked Trump to win NC and FL, while 538 correctly picked Biden to win GA and AZ (which prediction markets had as a tossup). Nonetheless, given prediction markets had AZ as a tossup, I'll give them a slight edge here.

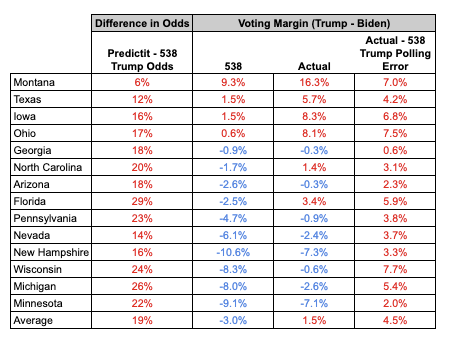

What's clear in all of this is that prediction markets correctly assumed that the polls were underestimating Republicans' true vote share in every race (presidential, Senate, and House). My guess is they assumed about a 3-4% polling miss for Trump in most states, and the states with smaller polling misses were the ones they failed to correctly predict (GA: +0.6%, AZ: +2.3%).
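To make the "assumed polling miss" idea concrete, here is a toy sketch of how shifting a poll lead by an expected miss changes a win probability. It is not anything 538 or traders actually run: the function `win_probability`, the normal error assumption, the 4-point `error_sd`, and the example numbers are all assumptions made up for illustration.

```python
import math


def win_probability(poll_margin, assumed_miss=0.0, error_sd=4.0):
    """Chance the poll leader wins, under a toy normal model.

    poll_margin:  leader's poll lead in points (hypothetical input)
    assumed_miss: points by which we think the polls overstate the leader
    error_sd:     standard deviation of polling error (assumed, not measured)
    """
    if error_sd <= 0:
        raise ValueError("error_sd must be positive")
    # Shift the lead by the assumed miss, then ask how likely the true
    # margin is to stay above zero (standard normal CDF via erf).
    z = (poll_margin - assumed_miss) / error_sd
    return 0.5 * (1.0 + math.erf(z / math.sqrt(2.0)))


# Hypothetical state where the leader is up 3.5 points in the polls.
no_miss = win_probability(3.5)
with_miss = win_probability(3.5, assumed_miss=3.5)
print(f"no assumed miss: {no_miss:.2f}, 3.5-point assumed miss: {with_miss:.2f}")
```

The point is only directional: if you believe the polls overstate one side by a few points, a state that looks like a comfortable lead can drop to roughly a coin flip.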

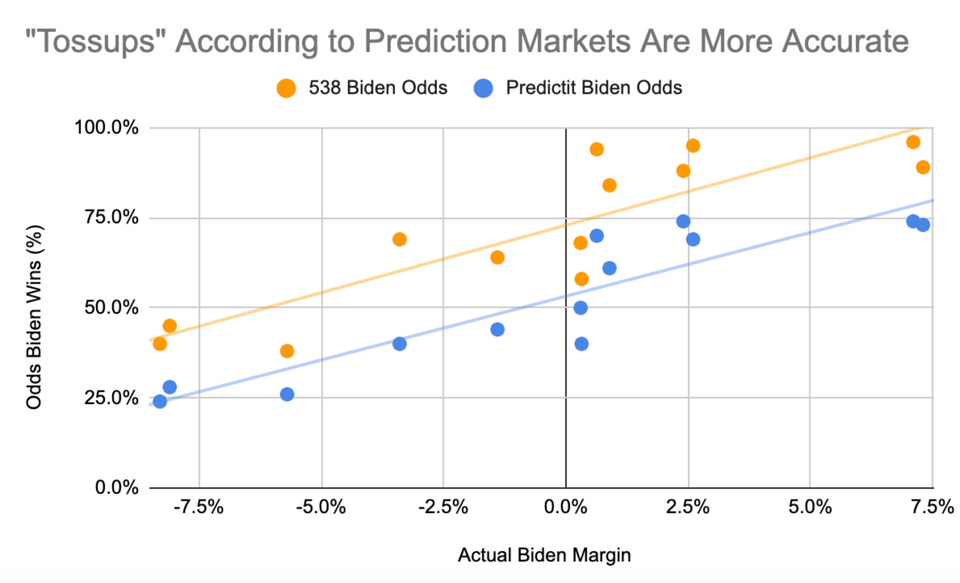

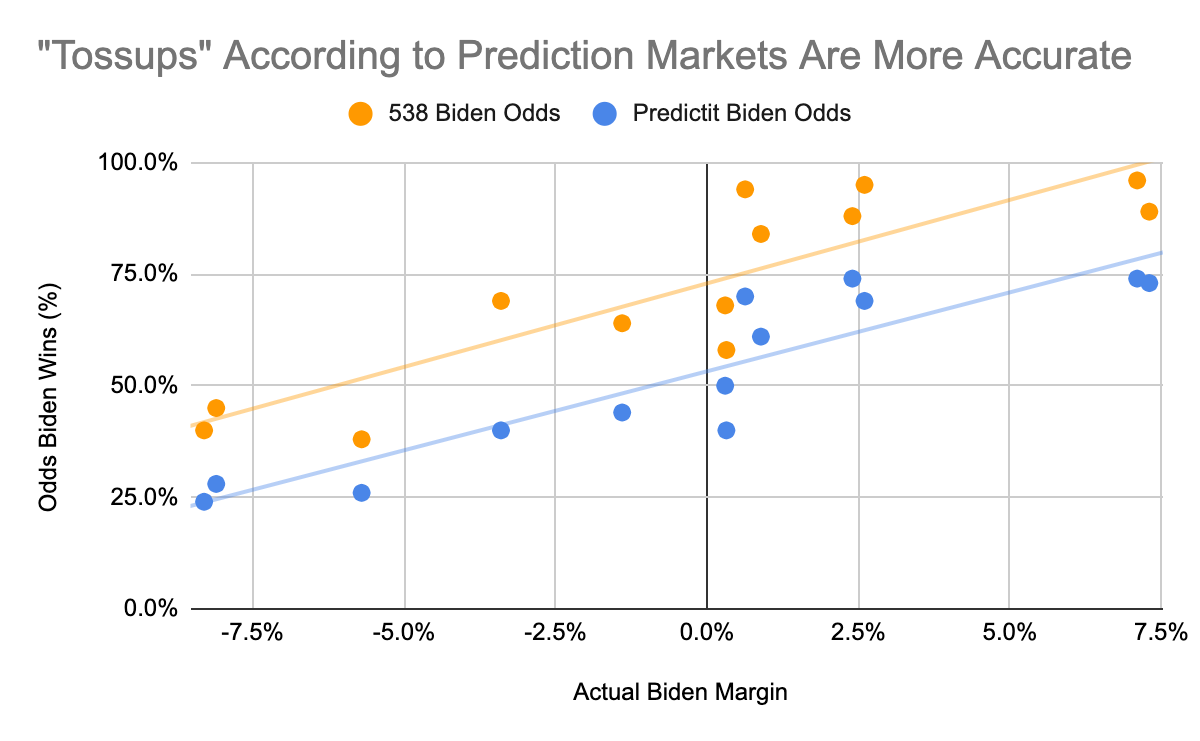

Now, let's look at each model's "calibration." Here, we can see prediction markets' "model" looks more accurate than 538's. If markets predicted a race to be 50/50, those races turned out to be roughly a tossup. For the same races, 538 had them at 75% Biden (h/t to Arpit Gupta for this cut of the data).
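For readers who want to try this kind of check themselves, here is a minimal sketch of a calibration table: bucket forecasts by predicted probability, then compare the average forecast in each bucket to how often the event actually happened. The function name `calibration_table`, the bin count, and the example numbers are all hypothetical and invented for illustration; they are not the data behind the chart above.

```python
from collections import defaultdict


def calibration_table(forecasts, outcomes, n_bins=4):
    """Return (bin_low, bin_high, count, mean_forecast, observed_rate) rows.

    forecasts: probabilities between 0 and 1 that an event happens
    outcomes:  1 if the event happened, 0 if it did not
    """
    if len(forecasts) != len(outcomes):
        raise ValueError("forecasts and outcomes must have the same length")
    bins = defaultdict(list)
    for p, happened in zip(forecasts, outcomes):
        # Equal-width bins; a forecast of exactly 1.0 goes in the top bin.
        idx = min(int(p * n_bins), n_bins - 1)
        bins[idx].append((p, happened))
    table = []
    for idx in sorted(bins):
        rows = bins[idx]
        mean_forecast = sum(p for p, _ in rows) / len(rows)
        observed_rate = sum(h for _, h in rows) / len(rows)
        table.append((idx / n_bins, (idx + 1) / n_bins, len(rows),
                      mean_forecast, observed_rate))
    return table


# Hypothetical forecasts (chance the Democrat wins) and results, made up here.
example_forecasts = [0.10, 0.20, 0.55, 0.60, 0.70, 0.90, 0.95, 1.00]
example_outcomes = [0, 0, 1, 0, 1, 1, 1, 1]

for low, high, n, mean_forecast, observed in calibration_table(
        example_forecasts, example_outcomes):
    print(f"{low:.2f}-{high:.2f}: n={n}, "
          f"forecast={mean_forecast:.2f}, observed={observed:.2f}")
```

A well-calibrated forecaster has the forecast and observed columns close to each other in every bucket. With only a handful of races per bucket, as in this election, the comparison is noisy, which is why I call it a "type of" calibration rather than the real thing.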

So the prediction markets did as well as or better than 538 in predicting the binary outcomes, correctly assumed a polling error in favor of Republicans, and generally appear better calibrated than 538's model.

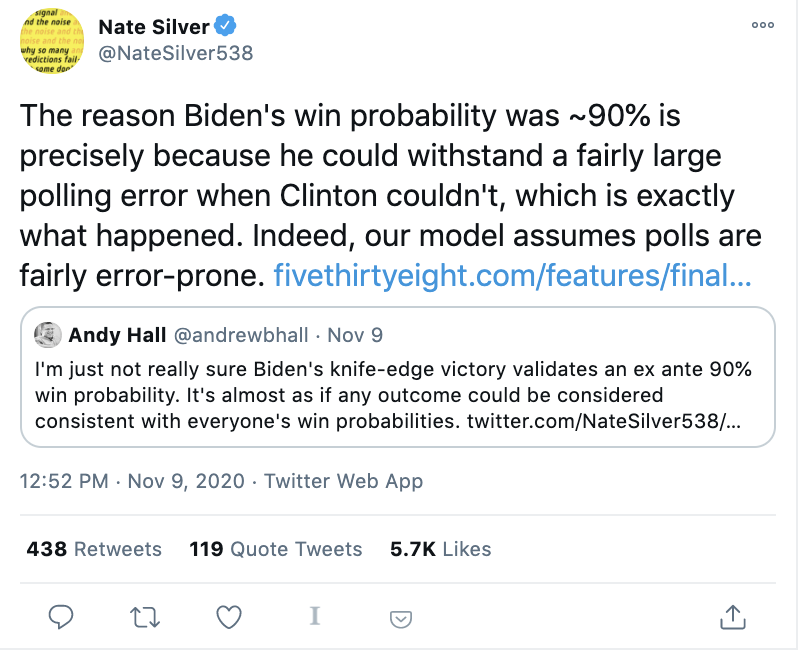

Despite the above, Nate Silver has defended 538's model. According to him, the fact that Biden won despite the large polling error is further evidence his estimate was correct.

At some level, this makes sense. Nate now estimates Biden will win the popular vote by 4.4 points, a 3.6-point miss relative to the polls and one of the largest presidential election polling misses in recent decades.

But his argument is not quite right. Nate assumes his previous forecast was correct with an equal chance of polling error on each side. According to Nate, no one could have predicted this polling error!

But the prediction markets did! We don't need to throw up our hands and say "who could have guessed Republicans would have outperformed the polls?" If we take the prediction markets as the best guess, then Biden really had a ~65% chance of winning, not a 90% (or 97%) chance.

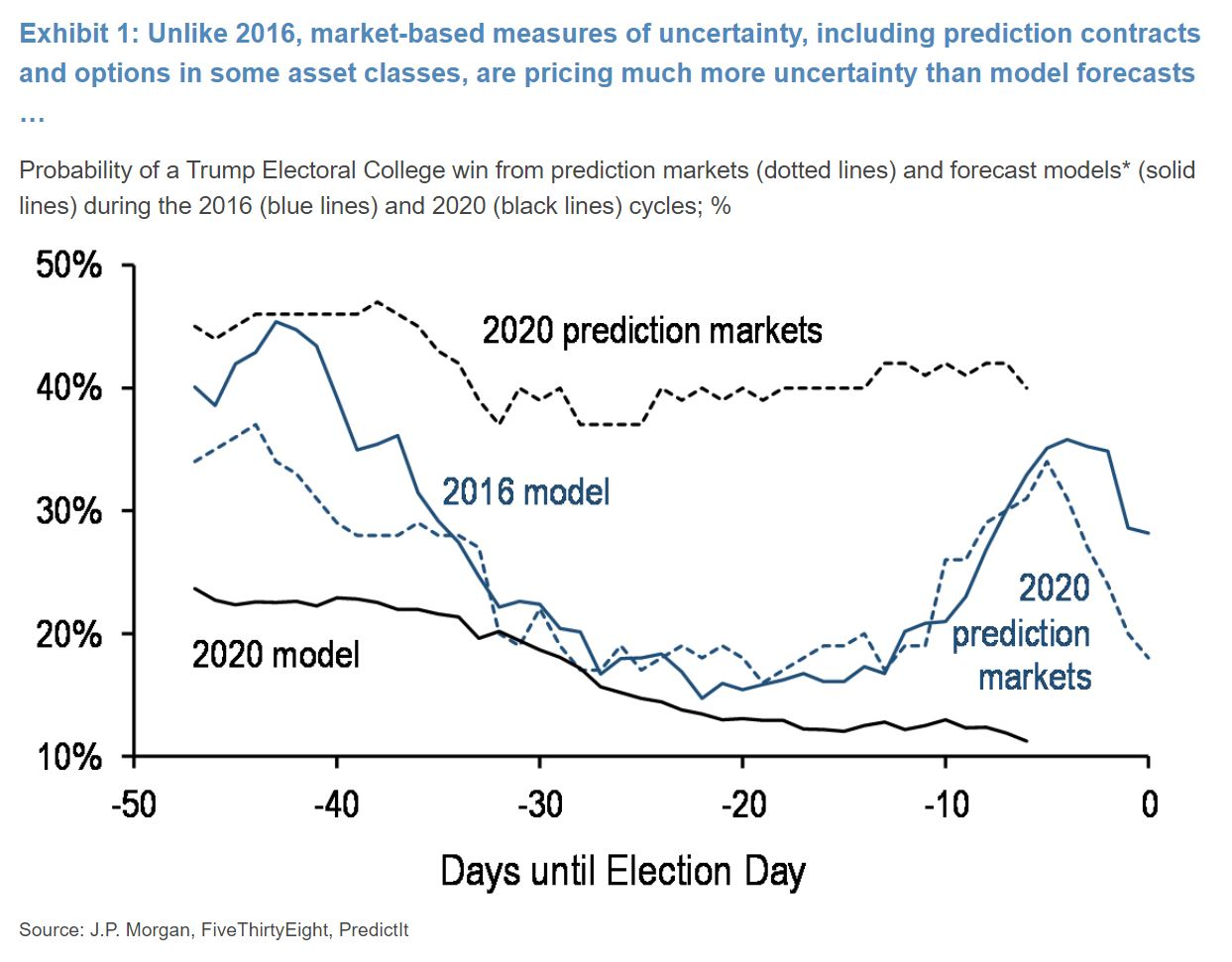

And the great irony here is that in 2016, the prediction markets seemed to trust 538 more than they should have. They largely tracked 538's Trump odds, resulting in both the markets and 538 failing to predict the (binary) winner for president. Contrary to what Nate has suggested, pollsters did not account for their mistakes in 2016 and 2018. Instead, it was actually prediction markets that accounted for pollsters' past mistakes. For a more detailed understanding of what prediction markets saw that 538 didn't, see this great piece by Arpit Gupta.

But what about the election night swings and recent developments?

I can already see you shaking your head and saying, "Charles, what about the big swing for Trump on election night? And the fact prediction markets are still giving Trump a small chance of victory?"

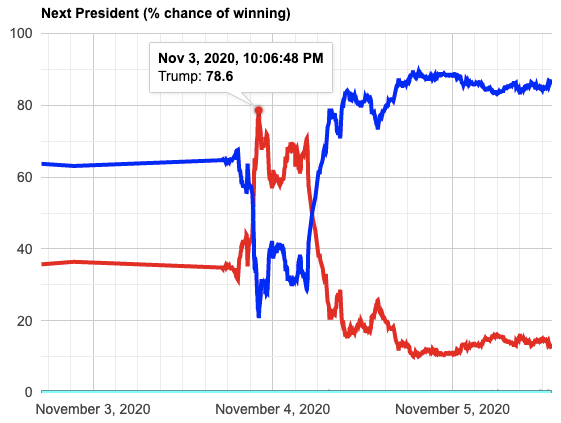

It's true prediction markets swung in favor of Trump on election night, reaching as high as 79% at 10pm and staying in Trump's favor until around 5am the next morning. The initial reason for the swing was stronger than expected results for Trump in Florida, particularly Miami, as well as early results in GA and NC looking better than expected (at least according to the NYT "needle").

In hindsight, Florida's polling error (~6% in the end) was larger than in most other states, so extrapolating from it was not a wise decision, and initial results for GA weren't representative.

I'm open to the idea that prediction markets "overreacted," especially given it would be in line with financial markets' overreaction to extreme events. But it's impossible to say for sure whether Trump really did have an 80% chance of winning based on the data at the time. The closest thing we have to a real-time forecast to compare to was the NYT needle, which also had Trump winning FL, GA, and NC handily (though to his credit, Nate Silver was skeptical of the market move at the time). Therefore, it's not clear to me that the swing for Trump was necessarily an "overreaction" as opposed to a reasonable assessment of the data at the time.

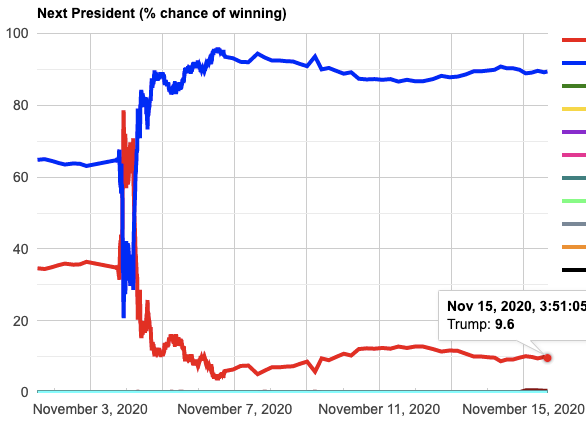

The fact that prediction markets still have Trump at meaningful odds (as of writing, Trump is still at 10%) is more perplexing to me.

I took a stab at answering this one on Twitter: Predictit has restrictions on the maximum number of traders per contract and also has high fees, which reduce arbitrage opportunities. Some long-shot bias may be at play here too. But ultimately, I don't think these reasons are sufficient to explain what's going on (especially given Betfair doesn't have the same restrictions as Predictit).

Then the obvious question is: as of Nov 22nd, does Donald Trump really have a 10% probability of becoming President? Either the answer is "yes", or this is one of the more compelling examples of prediction market "irrationality."

Unleash prediction markets

Despite the recent developments, I still consider this election a big win for prediction markets. Taken alone, maybe you'd wonder if prediction markets just got lucky. But it is really just another data point that adds to the mounting evidence for the accuracy and usefulness of political prediction markets. And we shouldn't be surprised, as any market with sufficient liquidity and money on the line should be hard to beat (just like almost all financial markets).

And yet, we can do even better! When I see "perplexing" examples like Trump's 10% odds today, or the fact that Predictit odds often add up to over 100% for a race, I don't see this as a knock against prediction markets themselves. That's like looking at a volatile, illiquid stock and saying "I guess financial markets don't work well."
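As an aside on odds that add up to over 100%: one simple way to read such prices is to rescale them so they sum to 1. The sketch below does exactly that. The function name `normalize_prices`, the candidate labels, and the prices are hypothetical, and proportional rescaling is just one common convention, not necessarily how any aggregator treats Predictit data.

```python
def normalize_prices(prices):
    """Rescale contract prices so the implied probabilities sum to 1.

    prices: dict mapping outcome name -> "yes" price in dollars (0 to 1)
    """
    total = sum(prices.values())
    if total <= 0:
        raise ValueError("prices must sum to a positive number")
    return {name: price / total for name, price in prices.items()}


# Hypothetical prices for a two-way race, invented for illustration.
hypothetical_prices = {"Candidate A": 0.66, "Candidate B": 0.38}

# How far the raw prices overshoot 100%.
excess = sum(hypothetical_prices.values()) - 1.0
implied = normalize_prices(hypothetical_prices)

print(f"raw prices exceed 100% by {excess:.2f}")
for name, prob in implied.items():
    print(f"{name}: {prob:.3f}")
```

In a liquid market with low fees, traders would sell both sides until that excess disappeared. With trading caps and high fees, it can persist, which says more about the plumbing than about the idea of prediction markets.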

Instead, it makes me even more bullish. Even at their clunky, relatively illiquid, and immature stage today, prediction markets are already outperforming pollsters and forecasters. Imagine how much better we could do if we had deregulated prediction markets where trading events was as common and easy as trading stocks.

What does the future bring? First, I hope for less name-calling and a more constructive debate around prediction markets. I hope Nate Silver can reconsider the value of prediction markets and help incorporate them even more in his analysis.

On the startup/companies side, I'll have more to say in another blog post. But I'm particularly excited about solutions in crypto, like Augur, and other startups that are going a more traditional route of getting CFTC approval. There is a general societal trend towards legalized gambling/betting, so here's to hoping for a brighter future for prediction markets too.

Thanks to Rob Dearborn, Layla Malamut and Adam Stein for reading drafts of this post.